In the last few days, news (fake or real) has become a focus thanks to a little event we call the presidential election.

The impact that social media had on the outcome is beginning to come to light, especially as it relates to the distribution of fake news stories.

You may be reading this wondering, “Why isn’t more being done about this?” The truth is, handling the situation means walking a pretty tricky line. Much of the focus has been on just how differently liberal and conservative people saw content. So, why is this?

We can break what you see in your news feed down into two categories:

- Organic News Feed posts

- Paid News Feed posts (advertising)

Let’s start with organic posts.

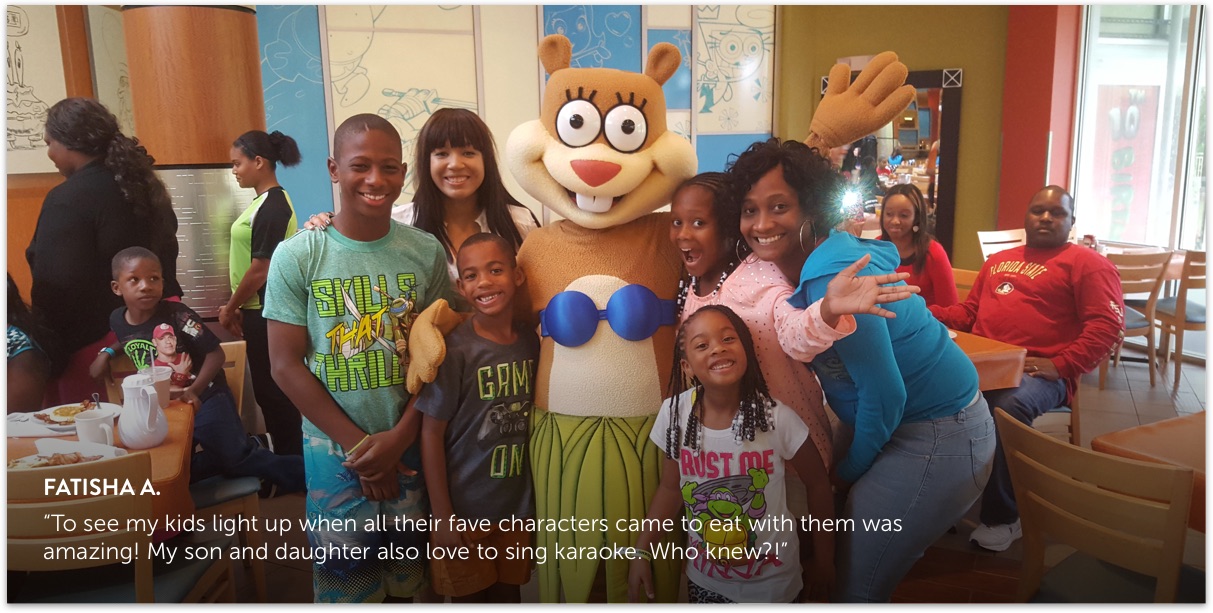

You may have heard a reference to the echo chamber effect. This is something we at Flip.to are very familiar with. It’s the core reason advocacy is an effective means to influence travelers. Let’s look at why.

Social media sites like Facebook allow users to self-select friends and sites they find interesting. The result? We’re friends with and follow sites that have strong similarities with our own views and backgrounds. Naturally, we’re presented with content in our news feed from these sources that is very narrow in scope and tends to be in line with what we already believe.

This is not algorithmic witchcraft. It’s actually common sense. We collect friends along the way, and we’re friends with those people because of some like-minded similarities.

Unlike real life, social media allows us to stop following friends who post things we don’t like. People are more likely to do this on social sites than they are during live in-person interactions.

Why? The other person generally has no idea you stopped following them (so we don’t feel so bad doing it). So in our social media lives at least, this creates a laser-focused set of people with similar beliefs and interests continuously feeding related content to each other.

It falls into an ethical gray area to ask companies like Facebook or Google to intervene in content shared organically. This quickly gets into big-brother-type censorship that we all, left or right, want to avoid. We’re all responsible for understanding that just because something is on the internet, it’s not necessarily based on fact.

Now let’s switch gears to paid News Feed posts, or in other words, advertising.

Here is where companies like Facebook and Google can make an impact. They’ve created an environment where advertisers target audiences through very specific data points. (Say, things we’ve liked or searched for.) This puts content in front of us that we are likely to find relevant or positive.

This actually makes the bubble we live in even smaller, since we’re only exposed to paid content that is based on our own self-selection and is likely to appeal to us. Couple that with the content we’re already seeing organically, as explained above, and, well, you get the picture.

Moderating these posts will not be an easy task. Any action in restricting advertisers due to content will be a challenging balancing act. Let’s face it, most news these days has a strong dose of point of view or opinion interlaced with the facts. So who is to judge what is real news and what is fake news?

You can actually see this firsthand by looking at this article by the Wall Street Journal. It illustrates the stark contrast in how information on the same topic is presented by different sources to different audiences. It’s easy to see the side-by-side difference between News Feed articles shared by “blue” and “red” followers. Depicted posts are sorted based on whether they are shared more by those with liberal or conservative behaviors.

Facebook has stepped up to the task, announcing several steps they’re testing to battle misinformation, both to provide more accurate information and to protect the integrity of the platform. Facebook’s CEO outlined a plan of stronger detection, news verification and user warnings in an announcement made on his personal Facebook page.

So what does all of this mean to a hotel? Opportunity!

For one thing, we’ve learned from the echo chamber effect that you should speak to the friends & family of your guests the same way you speak to your existing guests. They share similar social and economic status, making them a targeted audience who are likely to make similar decisions (like booking your hotel). Do this right and ultimately it will lead to higher conversion for your hotel.

The bottom line? Authenticity matters, as do relevancy and truth in advertising. The best marketing combines the three and gets the right content in front of the right people. Do that and everyone wins (Facebook and Google will love you for it too).